Understanding How Often AI Is Wrong

How often is AI wrong? As artificial intelligence becomes more integrated into daily life, questions about its accuracy and reliability are more important than ever. AI systems are designed to process vast amounts of data, but errors still occur due to biases, incomplete information, and algorithmic limitations. Understanding how often AI makes mistakes and the reasons behind these errors can help businesses and individuals make informed decisions when using AI-powered tools.

The Nature of AI Mistakes

AI mistakes occur when an algorithm produces unintended, incorrect, or suboptimal outcomes. These errors are often the result of limitations in training data, flawed algorithms, or misinterpretation of user input.

AI systems rely heavily on data patterns, and their “understanding” is mathematical rather than intuitive. This makes them prone to specific types of errors:

- Overfitting and Underfitting:

- Overfitting occurs when an AI model learns patterns too specifically, causing it to perform well on training data but poorly on new data.

- Underfitting happens when the model fails to learn enough from the data, leading to general inaccuracies.

- Bias in Decision-Making:

- AI models trained on biased data replicate and amplify those biases, leading to unethical or discriminatory outcomes.

- Lack of Contextual Understanding:

- AI systems struggle with nuanced or ambiguous inputs, often resulting in inappropriate responses.
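To make overfitting and underfitting concrete, here is a minimal Python sketch on made-up data. The true relationship is y = 2x, and the training labels carry a little noise. It compares three hypothetical models: a constant model that ignores the input (underfitting), a 1-nearest-neighbor model that memorizes every training point, noise included (overfitting), and a least-squares line. The data and models are illustrative assumptions, not a benchmark.

```python
from statistics import mean


def mse(model, xs, ys):
    """Mean squared error of a model (a function x -> prediction) on a dataset."""
    return sum((model(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def fit_constant(xs, ys):
    """Underfits: ignores x and always predicts the training mean."""
    m = mean(ys)
    return lambda x: m


def fit_line(xs, ys):
    """Ordinary least-squares line y = a + b*x."""
    mx, my = mean(xs), mean(ys)
    b = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    a = my - b * mx
    return lambda x: a + b * x


def fit_1nn(xs, ys):
    """Overfits: returns the label of the closest training point, reproducing its noise."""
    pairs = list(zip(xs, ys))
    return lambda x: min(pairs, key=lambda p: abs(p[0] - x))[1]


def evaluate():
    # Hypothetical data: true relationship y = 2x; training labels carry +/-0.5 noise.
    train_x = list(range(8))
    train_y = [2 * x + (0.5 if x % 2 == 0 else -0.5) for x in train_x]
    test_x = [i + 0.4 for i in range(7)]   # unseen inputs
    test_y = [2 * x for x in test_x]

    results = {}
    for name, fit in [("constant", fit_constant), ("line", fit_line), ("1-NN", fit_1nn)]:
        model = fit(train_x, train_y)
        results[name] = (mse(model, train_x, train_y), mse(model, test_x, test_y))
    return results


if __name__ == "__main__":
    for name, (train_err, test_err) in evaluate().items():
        print(f"{name:>8}: train MSE {train_err:.3f}, test MSE {test_err:.3f}")
```

The 1-nearest-neighbor model reaches zero training error yet does worse than the simple line on the unseen points, while the constant model does poorly on both. That gap between training and test error is the practical signature of overfitting.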

Common Types of AI Errors

AI errors manifest in various forms, often influenced by the application and context. Below are some of the most prevalent types:

- Data-Driven Errors:

- Insufficient or poor-quality data can lead to incomplete learning. For instance, AI predicting customer behavior might fail if the dataset lacks diversity.

- Data drift, where real-world conditions change over time, can render trained models obsolete.

- Logical and Computational Errors:

- Errors in algorithm design, such as incorrect logic or flawed assumptions, can cause systemic inaccuracies.

- For example, a chatbot might misinterpret user sentiment due to poor sentiment analysis algorithms.

- Perception Errors:

- Vision-based AI systems (e.g., facial recognition) can misidentify people in low-light conditions or people from demographic groups that are underrepresented in their training data.

- Natural Language Processing (NLP) models might misinterpret idiomatic expressions or sarcasm.

- Autonomous Decision-Making Flaws:

- Autonomous systems, such as self-driving cars, might misread traffic signals or fail to account for unexpected obstacles, causing safety hazards.

- Misinformation Generation:

- AI-generated content, such as deepfakes or fabricated text, poses significant ethical and practical challenges.

- Interpretability Issues:

- Many AI systems operate as “black boxes,” making it difficult for users to understand why certain decisions were made. This lack of transparency can lead to distrust and oversight failures.
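Data drift, listed above, can be monitored by comparing the distribution of an input feature at training time with what the model sees in production. The sketch below uses the Population Stability Index (PSI), one common drift measure. The feature (customer age), bin edges, and data are hypothetical, and the 0.25 alert threshold is a widely quoted rule of thumb rather than a standard.

```python
import bisect
import math


def bin_proportions(values, edges, floor=1e-4):
    """Share of values in each bin defined by `edges`; out-of-range values go to the nearest end bin."""
    n_bins = len(edges) - 1
    counts = [0] * n_bins
    for v in values:
        i = bisect.bisect_right(edges, v) - 1
        counts[min(max(i, 0), n_bins - 1)] += 1
    # A small floor keeps empty bins from producing log(0) or division by zero.
    return [max(c / len(values), floor) for c in counts]


def population_stability_index(reference, current, edges):
    """PSI = sum over bins of (current - reference) * ln(current / reference)."""
    ref = bin_proportions(reference, edges)
    cur = bin_proportions(current, edges)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))


if __name__ == "__main__":
    edges = [20, 30, 40, 50, 60]           # hypothetical bins for a customer-age feature
    training_ages = list(range(20, 60))    # distribution the model was trained on
    recent_ages = list(range(40, 80))      # hypothetical, older customer base after launch
    psi = population_stability_index(training_ages, recent_ages, edges)
    # 0.25 is a rule-of-thumb threshold for a significant shift, not a fixed standard.
    print(f"PSI = {psi:.2f}", "-> investigate or retrain" if psi > 0.25 else "-> stable")
```

Here the recent ages cover only the upper half of the training range, so the PSI lands far above 0.25. In practice a check like this would run per feature on a schedule, and a high value would prompt investigation or retraining rather than an automatic fix.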

Why Addressing AI Errors Matters

Understanding and addressing these errors is critical because:

- Consumer Trust: Users must trust AI systems for adoption to increase.

- Ethical Concerns: Reducing bias helps produce fair and equitable outcomes.

- Safety: In high-stakes applications like healthcare or autonomous driving, errors can have life-threatening consequences.

Causes of AI Mistakes

AI mistakes can undermine the effectiveness and reliability of artificial intelligence systems. To minimize these errors, it’s essential to understand the factors that contribute to them and the inherent limitations of current AI models. This knowledge allows for targeted improvements and fosters trust in AI technologies.

Factors Contributing to AI Errors

The sources of AI errors often lie in the way these systems are designed, trained, and implemented. Below are the primary factors responsible for inaccuracies:

- Data Quality and Quantity:

- AI models are only as good as the data used to train them. Insufficient, incomplete, or low-quality datasets can lead to inaccurate predictions and poor decision-making.

- Example: An AI model trained on a dataset with underrepresented groups may fail to make equitable predictions.

- Bias in Training Data:

- If the training data contains inherent biases, the AI model will replicate and amplify those biases.

- Example: Facial recognition software has been found to perform poorly on darker skin tones due to a lack of diversity in training datasets.

- Overfitting and Underfitting:

- Overfitting occurs when the model learns specific details in the training data, making it less generalizable to new data.

- Underfitting happens when the model fails to capture patterns in the training data, resulting in suboptimal performance.

- Human Error in Algorithm Design:

- Mistakes made during the creation of algorithms, such as incorrect assumptions or logical flaws, can propagate errors throughout the AI system.

- Environmental Factors:

- Real-world applications often encounter unexpected variables, such as noise, lighting changes, or sensor malfunctions, which can lead to AI mistakes.

- Interpretation Challenges:

- Miscommunication or misunderstanding of user input can cause AI systems to interpret instructions incorrectly, leading to errors.

Limitations of Current AI Models

While AI has advanced significantly, current models have notable limitations that contribute to errors:

- Lack of Contextual Understanding:

- AI models struggle to comprehend nuances, such as cultural context, sarcasm, or idiomatic expressions.

- Example: A chatbot might misinterpret a phrase like “break a leg” as a literal command instead of a good-luck wish.

- Dependence on Static Training Data:

- Many AI systems are trained on static datasets and cannot adapt to evolving real-world scenarios without retraining.

- Example: Predictive models in e-commerce may fail to adjust to seasonal trends without new data.

- Black Box Nature of AI Models:

- Many AI systems, especially deep learning models, operate as “black boxes,” meaning their decision-making processes are not transparent. This lack of explainability makes it difficult to identify and correct errors.

- Resource-Intensive Training Requirements:

- Training advanced AI models requires significant computational power and resources, which limits the ability to fine-tune them frequently.

- Ethical and Legal Constraints:

- Ethical considerations, such as privacy concerns and data ownership, often restrict the availability of high-quality training data, impacting model accuracy.

- Limitations in Generalization:

- AI models excel at specific tasks but struggle with tasks outside their domain or those requiring creative problem-solving.

Real-World Impacts of AI Errors

AI systems have transformed industries and daily life, but their mistakes can result in profound consequences. From misinformation to life-threatening errors in critical systems, understanding the real-world impacts of AI errors is essential for mitigating risks and fostering responsible use.

Misinformation and Public Perception

One of the most significant impacts of AI errors is the spread of misinformation. AI-powered systems, such as content generators and recommendation engines, can inadvertently promote false or misleading information.

- Amplification of False Information:

- Recommendation algorithms are often optimized for engagement rather than accuracy, which can lead to the widespread dissemination of incorrect information.

- Example: Social media platforms using AI to recommend posts might inadvertently spread fake news or conspiracy theories.

- Erosion of Trust:

- When users encounter repeated AI errors, such as incorrect suggestions or misleading outputs, it diminishes trust in AI systems and the organizations deploying them.

- Challenges in Fact-Checking:

- AI-generated content, such as deepfakes and fabricated news articles, complicates efforts to distinguish between real and fake information.

Effects on Daily Decisions

AI plays a crucial role in everyday decision-making, from what we buy to how we commute. Errors in these systems can lead to inconvenience, financial losses, or even safety concerns.

- Impact on Consumer Behavior:

- AI recommendations in e-commerce may mislead customers by promoting irrelevant or low-quality products due to flawed algorithms.

- Example: A recommendation system suggesting inappropriate items based on misunderstood preferences.

- Navigation and Travel:

- Errors in AI-powered navigation apps can misroute users, causing delays or directing them to unsafe areas.

- Example: Drivers following AI-powered GPS directions being routed onto inaccessible or hazardous roads.

- Healthcare Decisions:

- AI-powered healthcare applications may provide inaccurate diagnoses or treatment suggestions, potentially risking patient safety.

Catastrophic Failures in Critical Applications

In high-stakes applications, AI errors can lead to severe consequences, including financial losses, safety hazards, and even loss of life.

- Autonomous Vehicles:

- Errors in AI systems governing autonomous vehicles can lead to accidents, as seen in cases where vehicles failed to detect pedestrians or misinterpreted road conditions.

- Industrial Automation:

- AI errors in automated manufacturing systems can cause production halts, defective products, or equipment damage.

- Military and Defense Applications:

- Missteps in AI-powered defense systems, such as autonomous drones, could result in unintended casualties or escalated conflicts.

- Financial Systems:

- AI algorithms in trading or credit scoring can make erroneous decisions, causing market instability or unfair lending practices.

The Human Cost of AI Mistakes

The rise of artificial intelligence has brought incredible advancements, but its errors come with human costs that extend beyond technical failures. From ethical dilemmas to financial impacts, understanding these costs is essential for creating AI systems that are equitable, safe, and reliable.

Social and Ethical Implications

AI mistakes often raise profound social and ethical concerns, particularly when these errors disproportionately impact vulnerable populations.

- Reinforcement of Bias:

- AI systems trained on biased data can perpetuate or amplify societal inequalities.

- Example: Recruitment algorithms that favor certain demographics based on historical hiring data can exclude qualified candidates from minority groups.

- Privacy Violations:

- Errors in AI-driven surveillance systems can lead to breaches of personal privacy, impacting civil liberties.

- Example: Misidentification of individuals in facial recognition software used by law enforcement.

- Loss of Human Agency:

- Over-reliance on AI in decision-making can diminish human autonomy, leaving critical judgments to systems prone to errors.

- Example: Healthcare systems making incorrect treatment recommendations without human oversight.

- Ethical Dilemmas in Automation:

- The displacement of jobs due to AI automation raises ethical questions about the social responsibility of corporations and governments.

Legal and Economic Repercussions

The financial and legal fallout from AI mistakes can have far-reaching consequences, impacting businesses, governments, and individuals alike.

- Legal Accountability:

- Determining liability for AI errors is a complex legal challenge, especially in autonomous systems like self-driving cars.

- Example: In accidents involving autonomous vehicles, it’s often unclear whether the manufacturer, software developer, or user is responsible.

- Economic Disruptions:

- Incorrect predictions or decisions by AI systems in critical sectors, such as finance, can lead to massive financial losses.

- Example: Faulty trading algorithms triggering stock market instability.

- Cost of Rectification:

- Fixing errors in AI systems can be expensive, particularly in large-scale applications like industrial automation or healthcare.

- Unfair Economic Outcomes:

- AI systems making inaccurate credit scoring or loan approval decisions can result in financial exclusion for individuals who may otherwise qualify.

Broader Consequences

- Public Distrust:

- Widespread AI errors can erode trust in institutions that deploy them, affecting user adoption and confidence in the technology.

- Global Inequities:

- Errors in AI systems used globally, such as those in healthcare or education, can deepen inequalities between developed and developing nations.

Mitigating AI Errors

As AI continues to integrate into critical systems and everyday life, reducing errors is essential to ensure reliability and trustworthiness. By improving accuracy and adopting risk-reduction strategies, we can address the vulnerabilities of AI systems and their impact on users and industries.

Advances in AI Accuracy

Continuous innovations in AI technology are driving improvements in model accuracy, making systems more reliable and effective. Below are some notable advances:

- Enhanced Data Collection and Preprocessing:

- High-quality, diverse datasets are crucial for training AI models. Advances in data collection techniques and preprocessing methods ensure that training data better represents real-world scenarios.

- Example: Using synthetic data to augment datasets for underrepresented demographics can improve model fairness.

- Transfer Learning and Pretrained Models:

- Transfer learning allows AI to apply knowledge from one domain to another, reducing the likelihood of errors when working with limited data in new contexts.

- Example: A language model pretrained on extensive multilingual data performs better in regional language translation tasks.

- Model Optimization Techniques:

- Regularization (for example, penalizing large model weights) reduces overfitting and improves performance on new, diverse inputs, while pruning and quantization mainly make models smaller and faster, ideally with little loss of accuracy.

- Explainable AI (XAI):

- Making AI decisions transparent helps developers and users identify errors in logic or predictions, facilitating corrections.

- Example: XAI tools can visually map how an AI system reached a conclusion, helping users spot anomalies.

- Continuous Learning Systems:

- AI systems equipped with continuous learning capabilities adapt to real-time changes, reducing errors caused by outdated models.

- Example: Recommendation systems that adjust preferences based on user behavior over time.
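Regularization, listed above, can be illustrated with L2 (ridge) regularization on a one-feature linear model. This is a minimal sketch under simplifying assumptions: a penalty lam * b² is added on the slope only, which gives the closed form b = Sxy / (Sxx + lam), where Sxy and Sxx are the sums of centered cross-products and centered squares. The data and penalty values are made up.

```python
from statistics import mean


def ridge_fit_1d(xs, ys, lam):
    """Fit y = a + b*x, minimizing squared error plus lam * b**2 (intercept unpenalized).

    Closed form: b = Sxy / (Sxx + lam), a = mean(y) - b * mean(x).
    lam = 0 reduces to ordinary least squares.
    """
    mx, my = mean(xs), mean(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    b = sxy / (sxx + lam)
    return my - b * mx, b


if __name__ == "__main__":
    xs = list(range(8))
    ys = [2 * x + (0.5 if x % 2 == 0 else -0.5) for x in xs]  # hypothetical noisy data
    for lam in (0, 10, 100):
        a, b = ridge_fit_1d(xs, ys, lam)
        print(f"lambda={lam:>3}: intercept={a:.3f}, slope={b:.3f}")
```

Larger penalties shrink the slope toward zero, limiting how far the model can bend to fit noise. Too large a penalty causes underfitting instead, which is why the penalty strength is normally tuned on held-out data.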

Strategies for Risk Reduction

Reducing AI errors involves proactive strategies that address risks throughout the system’s lifecycle.

- Robust Testing and Validation:

- Comprehensive testing under varied conditions helps ensure that AI systems perform reliably across scenarios.

- Example: Simulating edge cases, such as rare medical conditions in AI diagnostic tools, to evaluate performance in high-stakes situations.

- Human Oversight and Intervention:

- Combining AI automation with human judgment minimizes the impact of errors, particularly in critical applications.

- Example: Autonomous vehicles incorporating driver override systems in case of malfunction.

- Bias Detection and Mitigation:

- Bias-detection methods, such as comparing outcomes across demographic groups, help AI systems make decisions more equitably.

- Example: Screening the training data of AI hiring systems for discriminatory patterns.

- Ethical Guidelines and Regulations:

- Adopting industry-wide ethical standards and government regulations ensures accountability and minimizes harm caused by AI errors.

- Domain-Specific AI Models:

- Narrowing AI’s focus to specific domains or tasks reduces the likelihood of errors by simplifying problem scopes.

- Example: AI systems tailored for medical imaging are often more accurate than general-purpose diagnostic tools.
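One simple bias-detection check is to compare selection rates across groups. The sketch below applies the "four-fifths" rule of thumb from US employment-selection guidance: a group whose selection rate is below 80% of the highest group's rate is flagged for review. Group names and outcomes are hypothetical, and passing this check does not by itself show that a system is fair.

```python
from collections import defaultdict


def selection_rates(decisions):
    """decisions: iterable of (group, was_selected) pairs -> {group: share selected}."""
    totals, selected = defaultdict(int), defaultdict(int)
    for group, was_selected in decisions:
        totals[group] += 1
        selected[group] += int(was_selected)
    return {g: selected[g] / totals[g] for g in totals}


def four_fifths_flags(decisions, threshold=0.8):
    """Groups whose selection rate falls below `threshold` times the highest group's rate."""
    rates = selection_rates(decisions)
    best = max(rates.values(), default=0)
    if best == 0:
        return {}
    return {g: r / best for g, r in rates.items() if r / best < threshold}


if __name__ == "__main__":
    # Hypothetical screening results: group A 20/50 selected, group B 10/50 selected.
    decisions = [("A", i < 20) for i in range(50)] + [("B", i < 10) for i in range(50)]
    print(selection_rates(decisions))    # selection rate per group
    print(four_fifths_flags(decisions))  # flagged groups with their impact ratio
```

Group B's rate (0.2) is half of group A's (0.4), so it is flagged with a ratio of 0.5. A flag like this is a prompt for human investigation of the data and model, not an automatic verdict.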

How to Ensure AI Truthfulness

Ensuring the truthfulness of AI systems is a critical goal in developing responsible and reliable technology. AI’s effectiveness lies not only in its ability to process vast amounts of data but also in its capacity to generate accurate and unbiased information. Below are actionable strategies to achieve this.

The Role of Human Oversight

Human oversight is an essential component in maintaining AI truthfulness, ensuring that automated systems are both accurate and ethical.

- Monitoring AI Outputs:

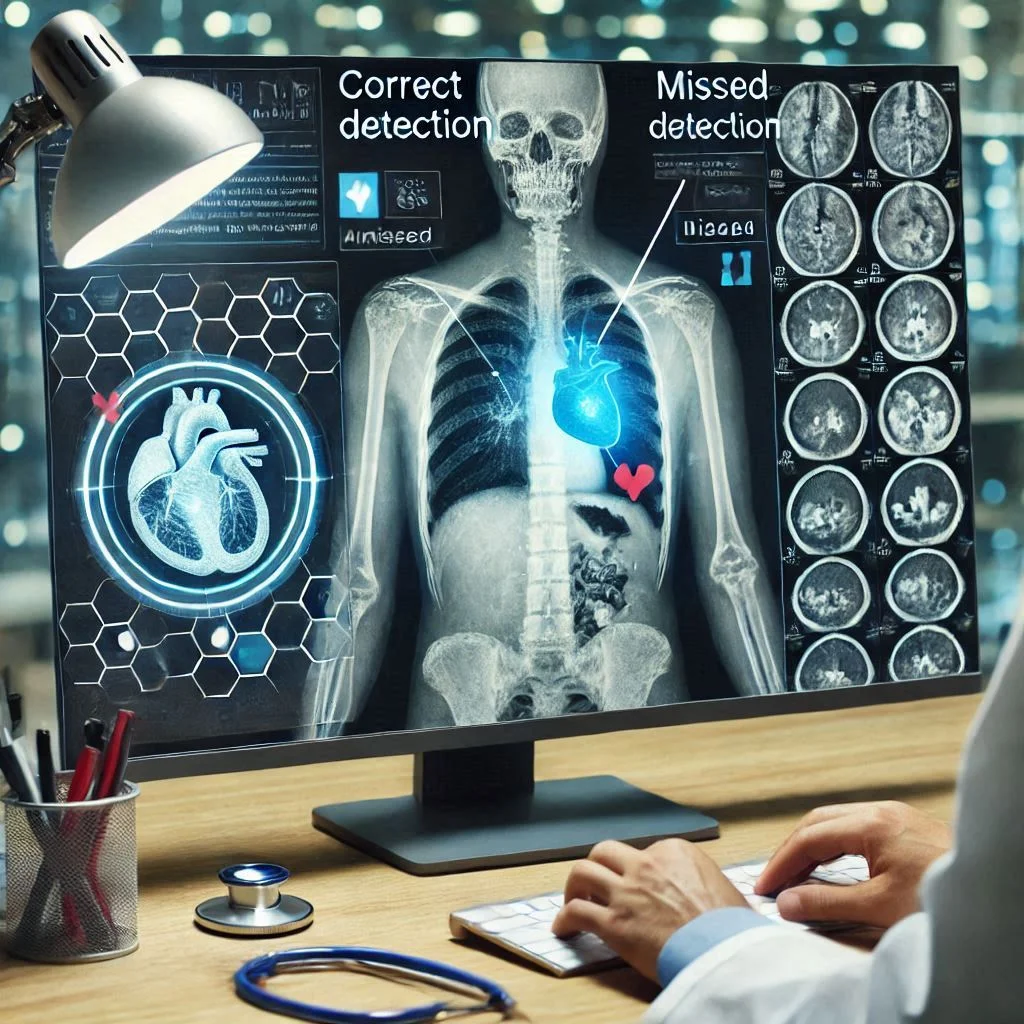

- Human operators review AI decisions, especially in high-stakes scenarios such as healthcare or legal applications.

- Example: Radiologists verifying AI-detected abnormalities in medical scans.

- Collaborative Decision-Making:

- Combining human intuition with AI’s analytical power leads to more balanced and accurate results.

- Example: AI-powered legal tools offering case analyses, with lawyers making the final decisions.

- Ethical Guidance:

- Human oversight helps enforce ethical guidelines, ensuring AI systems adhere to societal norms and values.
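Monitoring AI outputs is often implemented as confidence-based triage: predictions the model is unsure about, plus any label treated as high-stakes, go to a human reviewer. This is a minimal sketch; the `Prediction` structure, the 0.9 threshold, and the "abnormal" label are hypothetical, and confidence scores are only useful for this if they are reasonably calibrated.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    case_id: str
    label: str
    confidence: float  # model score in [0, 1]; assumed to be reasonably calibrated


def triage(predictions, threshold=0.9, always_review=frozenset()):
    """Split predictions into (auto_accepted, needs_human_review)."""
    auto, review = [], []
    for p in predictions:
        needs_human = p.confidence < threshold or p.label in always_review
        (review if needs_human else auto).append(p)
    return auto, review


if __name__ == "__main__":
    scans = [
        Prediction("scan-1", "normal", 0.97),
        Prediction("scan-2", "normal", 0.62),
        Prediction("scan-3", "abnormal", 0.99),
    ]
    auto, review = triage(scans, threshold=0.9, always_review={"abnormal"})
    print("auto-accepted:", [p.case_id for p in auto])
    print("sent to radiologist:", [p.case_id for p in review])
```

Here the low-confidence normal scan and the abnormal finding are both queued for a radiologist, in line with the example of radiologists verifying AI-detected abnormalities.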

Cross-Referencing and Verification

Cross-referencing and verifying AI-generated outputs with reliable sources minimizes misinformation and enhances trustworthiness.

- Multi-Source Verification:

- AI systems can be designed to compare information across multiple trusted sources before presenting it.

- Example: AI fact-checking tools using news archives and academic databases to confirm the validity of statements.

- Feedback Mechanisms:

- Incorporating user feedback allows systems to identify and correct errors, ensuring continual improvement.

- Example: A chatbot updated based on user reports of inaccurate responses.

- Third-Party Audits:

- Independent evaluations of AI models help uncover biases and inaccuracies, fostering transparency and accountability.
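Multi-source verification can be sketched as a simple agreement check: accept an answer only when enough sources give the same one. The source names, answers, and 2/3 agreement threshold below are hypothetical; real fact-checking also has to normalize answers and weigh source reliability, which this sketch ignores.

```python
from collections import Counter


def cross_check(answers, min_agreement=2 / 3):
    """answers maps source name -> that source's answer (None if it has none).

    Returns (accepted_answer_or_None, agreement_share). Sources without an answer
    count against agreement, so silence is never treated as confirmation.
    """
    given = [a for a in answers.values() if a is not None]
    if not given:
        return None, 0.0
    answer, votes = Counter(given).most_common(1)[0]
    agreement = votes / len(answers)
    return (answer if agreement >= min_agreement else None), agreement


if __name__ == "__main__":
    # Hypothetical sources checking the year of the first crewed Moon landing.
    checks = {"news_archive": "1969", "academic_db": "1969", "model_output": "1968"}
    print(cross_check(checks))
```

If the sources disagree too much, the function returns None, signaling that the claim should be treated as unverified rather than passed on to the user.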

Using Domain-Specific AI Models

Specialized AI models tailored for specific industries or tasks are more effective at generating truthful and relevant outcomes.

- Focused Training Data:

- Domain-specific AI models are trained on datasets relevant to their intended application, improving precision.

- Example: AI tools for financial analysis trained on market data instead of general datasets.

- Minimized Ambiguity:

- Narrowing the scope of AI reduces the chances of errors caused by handling unrelated or overly broad information.

- Example: AI in agriculture focused exclusively on crop disease detection instead of generalized environmental analytics.

- Improved Accuracy in Specialized Tasks:

- Tailored AI models often outperform general-purpose systems on complex, niche problems.

Understanding AI Limitations

Recognizing and addressing AI’s limitations is vital for ensuring it remains truthful and effective.

- Inherent Constraints:

- AI systems have limited contextual understanding and depend heavily on their training data. Acknowledging these limitations prevents overestimating AI’s capabilities.

- Example: Chatbots unable to comprehend sarcasm or nuanced language.

- Transparency in AI Development:

- Openly communicating AI’s limitations to users builds trust and helps set realistic expectations.

- Example: Notifying users when an AI’s recommendations are probabilistic rather than definitive.

- Avoiding Over-Reliance:

- Balancing AI use with human judgment prevents critical decisions from being solely based on potentially flawed algorithms.

How Often Is AI Wrong

Understanding the frequency of AI errors is crucial for assessing its reliability across various applications. While AI has significantly advanced in accuracy, errors persist due to inherent limitations, data quality issues, and contextual challenges. Let’s delve into how often AI makes mistakes across different domains and the factors influencing its accuracy.

Frequency of Errors Across Different Domains

The frequency of AI errors varies widely depending on the domain, the complexity of tasks, and the quality of the training data.

- Healthcare

- AI is used for diagnostics, treatment recommendations, and medical imaging.

- Error Rate: Even radiology algorithms that achieve 90-95% accuracy still get 5-10% of cases wrong, and some of those errors are missed critical abnormalities.

- Example: AI misdiagnosing rare diseases due to insufficient representation in training datasets.

- Finance

- AI powers fraud detection, trading algorithms, and credit scoring.

- Error Rate: Fraud detection AI has error rates of 1-3%, but this small margin can still result in significant financial consequences.

- Example: False positives in fraud detection blocking legitimate transactions.

- Autonomous Vehicles

- AI controls navigation, obstacle detection, and decision-making in self-driving cars.

- Error Rate: Some analyses suggest autonomous vehicles have lower accident rates than human drivers, but they still make errors in an estimated 1-2% of complex scenarios.

- Example: Failing to correctly interpret road signs or unusual pedestrian behavior.

- E-Commerce

- AI is used in recommendation engines, chatbots, and inventory management.

- Error Rate: AI gets product recommendations wrong 10-20% of the time, often leading to poor user experiences.

- Example: Suggesting irrelevant items based on limited purchase history.

- Natural Language Processing (NLP)

- AI chatbots, translation services, and virtual assistants rely on NLP.

- Error Rate: Errors in contextual understanding occur in 15-25% of conversations, especially with ambiguous queries.

- Example: Misinterpreting user intent in multi-language interactions.
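Small error rates can still produce large numbers of mistakes when a system handles high volumes, especially when the event it looks for is rare. The arithmetic below uses hypothetical figures (1,000,000 transactions, 0.1% of them fraudulent, 99% of fraud caught, a 2% false-positive rate) to show why a fraud detector within the 1-3% error range above can still block many legitimate transactions.

```python
def alert_breakdown(n, prevalence, sensitivity, false_positive_rate):
    """Expected outcome counts when a detector screens n cases (every input is an assumption)."""
    positives = n * prevalence            # cases that really are fraud
    negatives = n - positives             # legitimate cases
    true_alerts = positives * sensitivity
    false_alerts = negatives * false_positive_rate
    return {
        "true_alerts": true_alerts,
        "false_alerts": false_alerts,
        "missed": positives - true_alerts,
        "precision": true_alerts / (true_alerts + false_alerts),  # share of alerts that are real fraud
    }


if __name__ == "__main__":
    r = alert_breakdown(1_000_000, 0.001, 0.99, 0.02)
    print(f"{r['false_alerts']:,.0f} legitimate transactions flagged; "
          f"only {r['precision']:.1%} of alerts are real fraud")
```

With these assumptions, about 19,980 legitimate transactions are flagged against 990 real frauds caught, so fewer than 5% of alerts are genuine. This base-rate effect is why a seemingly small false-positive rate matters.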

Factors Affecting AI Accuracy Rates

AI’s accuracy rates depend on a range of factors, from data quality to the complexity of the task. Understanding these factors is vital for improving performance.

- Data Quality and Quantity

- AI models are only as good as the data they are trained on. Incomplete, biased, or noisy datasets significantly increase error rates.

- Example: Training an AI translation model on limited or outdated text samples leads to poor language interpretation.

- Model Complexity

- Overly complex models risk overfitting, while oversimplified models fail to capture intricate relationships in data.

- Example: General-purpose AI models underperform compared to specialized models designed for a single domain.

- Environmental Variability

- Changes in real-world conditions, such as lighting or context, can lead to misinterpretation by AI.

- Example: Facial recognition AI failing in low-light environments.

- Human Interaction

- Errors often stem from unclear instructions or poorly framed queries by users.

- Example: A virtual assistant misinterpreting an ambiguous prompt like “Book a table for five” (five people, or five o’clock?).

- Algorithm Bias

- Bias in training datasets can skew AI predictions, leading to higher error rates for underrepresented groups.

- Example: AI models trained predominantly on Western accents struggling with diverse dialects.

FAQs

AI systems are rapidly transforming industries and daily life, but questions about their errors, impacts, and legal implications remain critical. This FAQ section addresses common concerns, providing clear and actionable insights to help users understand and navigate the complexities of AI.

What is the most common type of error made by AI?

The most frequent errors made by AI systems include classification mistakes, contextual misinterpretations, and false positives and negatives.

- Classification Errors:

- AI often misclassifies data, such as identifying objects incorrectly in images or assigning the wrong label in predictive models.

- Example: An AI-powered facial recognition system misidentifying individuals because of poor lighting or because their demographic group is underrepresented in the training data.

- Contextual Misinterpretations:

- Language models and chatbots struggle to understand nuanced or ambiguous queries.

- Example: Misinterpreting a sarcastic remark as a factual statement, leading to an inaccurate response.

- False Positives and Negatives:

- False positives occur when AI identifies something that isn’t there, while false negatives fail to recognize something that is.

- Example: Fraud detection systems blocking legitimate transactions as potential fraud (false positive).
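False positives and false negatives are usually counted with a confusion matrix. This minimal sketch tallies the four outcomes for binary labels (True meaning, say, "fraud") and derives the false-positive and false-negative rates; the example labels are made up.

```python
def confusion_counts(actual, predicted):
    """Tally true/false positives and negatives for paired binary labels."""
    counts = {"TP": 0, "FP": 0, "FN": 0, "TN": 0}
    for a, p in zip(actual, predicted):
        if p:
            counts["TP" if a else "FP"] += 1
        else:
            counts["FN" if a else "TN"] += 1
    return counts


def error_rates(counts):
    """False-positive rate = FP / (FP + TN); false-negative rate = FN / (FN + TP)."""
    negatives = counts["FP"] + counts["TN"]
    positives = counts["FN"] + counts["TP"]
    fpr = counts["FP"] / negatives if negatives else 0.0
    fnr = counts["FN"] / positives if positives else 0.0
    return fpr, fnr


if __name__ == "__main__":
    actual = [True, False, False, True, False]     # was the transaction really fraud?
    predicted = [True, True, False, False, False]  # did the model flag it?
    counts = confusion_counts(actual, predicted)
    print(counts, error_rates(counts))
```

In this toy run, one legitimate transaction is wrongly blocked (a false positive) and one fraudulent transaction slips through (a false negative).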

How do AI errors impact everyday life?

AI errors affect daily life in subtle and significant ways, influencing decision-making, convenience, and trust.

- Reduced User Experience:

- Inaccurate recommendations in e-commerce or streaming platforms can lead to user dissatisfaction.

- Example: Suggesting irrelevant products or movies based on limited user data.

- Financial Consequences:

- Errors in financial tools, such as loan approvals or trading algorithms, can result in monetary losses or denied access to critical resources.

- Erosion of Trust:

- Frequent mistakes in AI-driven applications, such as chatbots or virtual assistants, reduce user trust in the technology.

- Example: A virtual assistant providing incorrect directions or misinterpreting commands.

- Social Implications:

- AI inaccuracies in social media platforms can amplify misinformation, affecting public perception and discourse.

Can AI errors be completely eliminated?

It is highly unlikely that AI errors can be entirely eradicated due to several inherent challenges:

- Dependence on Imperfect Data:

- AI systems rely on data, which is often incomplete, biased, or noisy. Perfect data is unattainable, making occasional errors inevitable.

- Complexity of Real-World Scenarios:

- AI cannot account for every edge case or unexpected scenario, especially in dynamic environments.

- Example: Autonomous vehicles encountering rare road conditions like animal crossings in unusual locations.

- Human-Like Limitations:

- AI, like humans, will always face limitations in processing ambiguous or contradictory information.

- Continuous Evolution:

- As systems evolve and adapt, errors from new contexts or updates are likely to occur, requiring ongoing refinement.

What are the legal implications of AI mistakes?

AI errors raise complex legal questions about accountability, liability, and regulatory compliance.

- Determining Responsibility:

- Who is liable for an AI’s mistake? Developers, manufacturers, or users? This depends on the context and existing laws.

- Example: A self-driving car causing an accident raises questions about manufacturer liability versus user responsibility.

- Regulatory Frameworks:

- Governments are working to create legal frameworks to address AI accountability. These include regulations for data privacy, fairness, and transparency.

- Economic Repercussions:

- AI errors in business can lead to contractual disputes or financial losses, requiring legal resolution.

- Example: An AI-driven stock trading algorithm causing significant losses due to a miscalculated prediction.

How can the public protect themselves from AI misinformation?

Public awareness and proactive measures can mitigate the risks of AI-driven misinformation:

- Verify Information:

- Always cross-check AI-generated content with credible sources to ensure accuracy.

- Understand Limitations:

- Recognize that AI, such as chatbots or search algorithms, can provide incomplete or biased information.

- Use Trusted Platforms:

- Rely on reputable tools and platforms known for their accuracy and reliability.

- Stay Educated:

- Learn how AI works and how it can spread misinformation so you can make informed decisions.

Conclusion

Artificial Intelligence (AI) is undeniably reshaping industries, streamlining processes, and enhancing user experiences, yet it is not without its challenges. Understanding the causes, impacts, and mitigation strategies for AI errors is crucial in leveraging its full potential responsibly. From addressing misinformation and minimizing real-world risks to ensuring ethical usage and legal accountability, a balanced approach is key. While AI errors cannot be entirely eliminated, advances in technology, human oversight, and domain-specific applications are paving the way for more accurate and trustworthy systems. By staying informed, vigilant, and proactive, we can navigate the complexities of AI, turning its challenges into opportunities for innovation and societal growth. The future of AI is promising, but its success depends on our collective responsibility to harness it wisely.